So, you want to run your own hypervisor using XenServer, but you want some of the flexibility of KVM too. This guide explains how to install and configure KVM with virt-manager over X11 forwarding, and then how to run XenServer as a KVM guest. We will go step by step. In this case I am using a Mac.

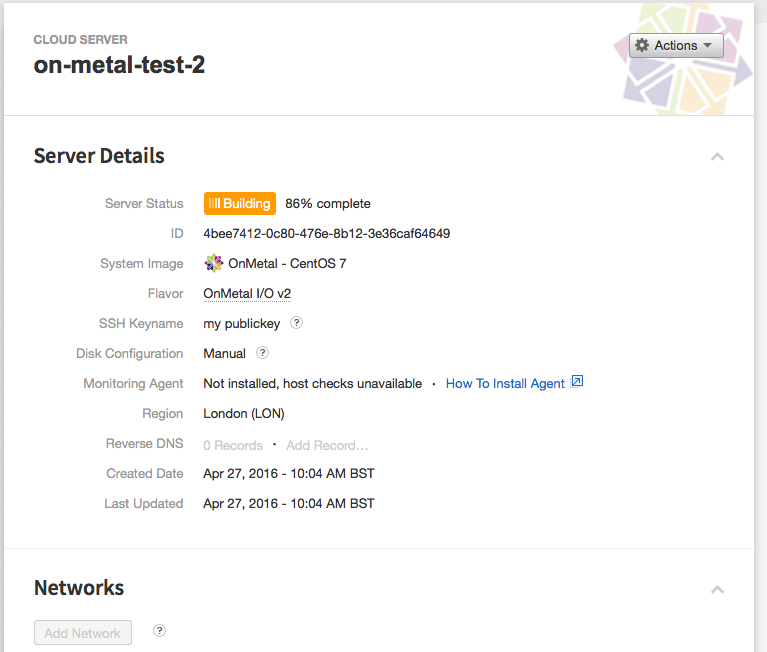

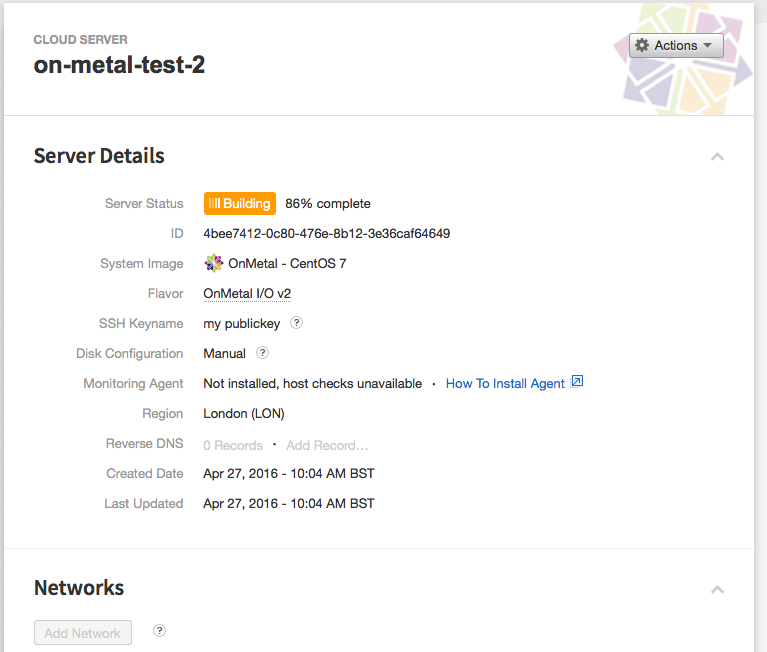

Step 1 – Create Rackspace onmetalv2 server

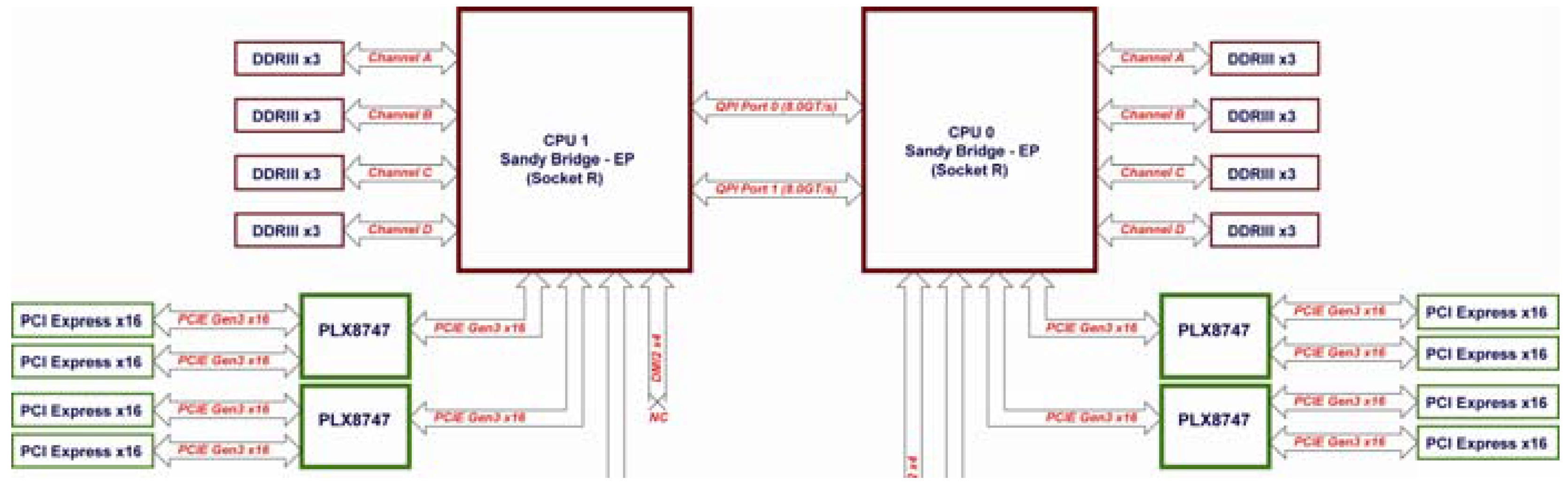

In this case I’ll be using a 40 CPU, 128GB machine as the host, from the new onmetalv2 server range offered by Rackspace public cloud.

Please note that this is a bare metal server, not a cloud server. However, it is offered by the same cloud platform at mycloud.rackspace.co.uk

Step 2 – Install and configure KVM

sudo yum update -y

sudo yum -y install kvm virt-manager libvirt virt-install qemu-kvm xauth dejavu-lgc-sans-fonts

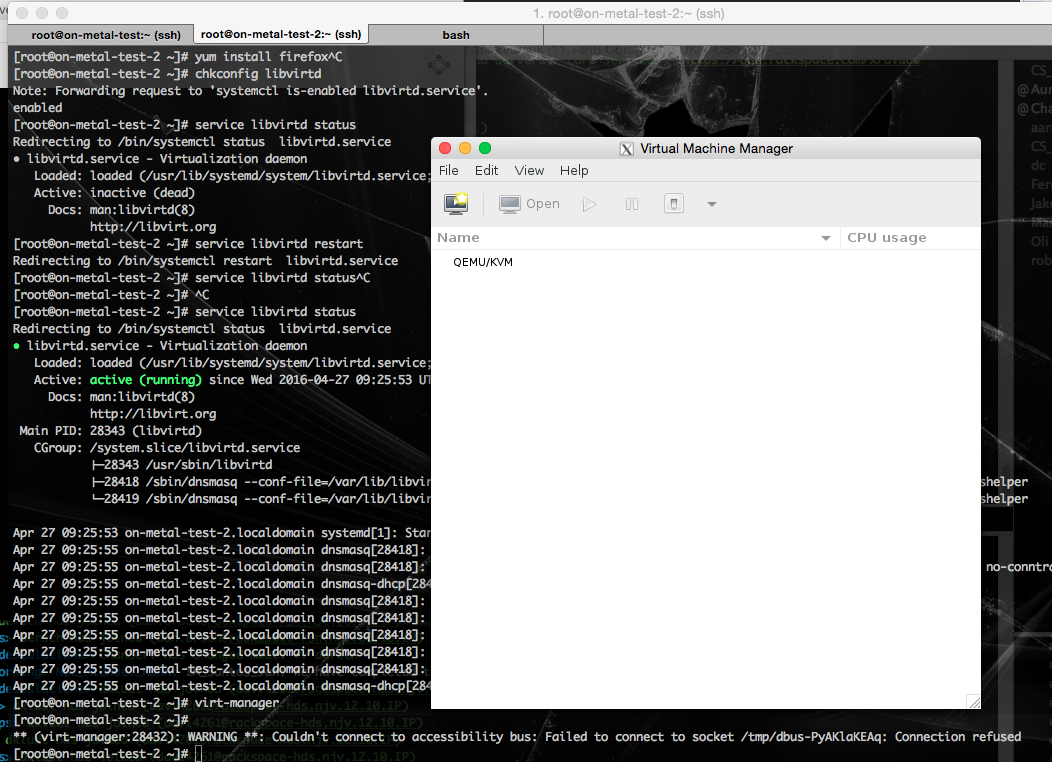

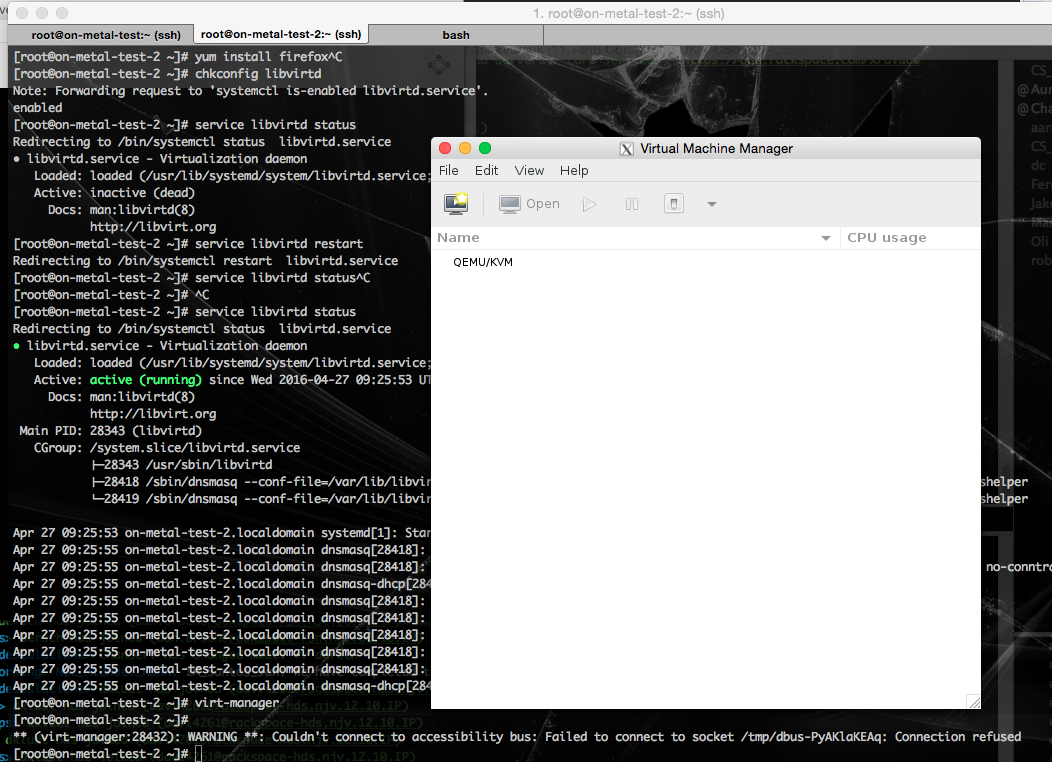
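
Before going further it may be worth confirming that the host CPU exposes hardware virtualization, since KVM depends on it. A minimal sketch, assuming an x86 host where the flags show up as vmx (Intel) or svm (AMD) in /proc/cpuinfo; the function name count_virt_flags is made up for this example:

```shell
# Count logical CPUs advertising hardware virtualization
# (vmx = Intel VT-x, svm = AMD-V).
# Takes an optional path so it can be pointed at a saved copy of cpuinfo.
count_virt_flags() {
    cpuinfo=${1:-/proc/cpuinfo}
    # Only look at the per-CPU "flags" lines; -w matches whole words,
    # so "svm" is not matched inside a longer flag name such as "svm_lock".
    grep '^flags' "$cpuinfo" | grep -E -c -w 'vmx|svm'
}

if [ -r /proc/cpuinfo ]; then
    echo "CPUs with virtualization flags: $(count_virt_flags)"
fi
```

A count of 0 would suggest that virtualization is disabled in firmware or not available on this machine.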

Step 3 – Start and configure libvirtd

chkconfig libvirtd on

service libvirtd status

service libvirtd restart

service libvirtd status
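
On a systemd-based release such as CentOS 7 (which the el7 kernel strings later in this guide suggest), the chkconfig and service commands are forwarded to systemctl. A hedged sketch of the native equivalents; the run helper and the DRY_RUN variable are inventions of this example, so the commands are only printed unless you opt in with DRY_RUN=0:

```shell
# Print each command instead of running it unless DRY_RUN=0 is set.
run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

run systemctl enable libvirtd     # start at boot
run systemctl restart libvirtd    # (re)start now
run systemctl is-active libvirtd  # prints "active" when the daemon is up
```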

Step 4 – MAC SYSTEMS – Install XQuartz

For Mac users, simply install XQuartz, which can be found at http://www.xquartz.org/

Step 4a – Windows Systems – Install Xming

Windows users can get in on the action too, using Xming, which can be found at https://sourceforge.net/projects/xming/

Step 5 – MAC SYSTEMS ONLY – Configure X11 Forwarding

Xming will work out of the box on Windows, but Mac users need to make sure X11 forwarding is enabled.

touch ~/.ssh/config

echo "ForwardX11 yes" >> ~/.ssh/config

This enables X11 forwarding, which for Mac users has to be done on the client side. You can then display any graphical application you like on the client, such as Firefox or even a virtual machine console, while it actually runs on the remote server. SSHv2 is beautiful. That’s it, you’ve completed the most important steps.
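
Running the echo line more than once appends duplicate entries. A small sketch that only adds the line when it is missing; the function name and the optional file argument are assumptions for illustration:

```shell
# Append "ForwardX11 yes" to an SSH client config only if it is not already there.
enable_x11_forwarding() {
    config=${1:-"$HOME/.ssh/config"}
    mkdir -p "$(dirname "$config")"
    touch "$config"
    # -i: ssh_config keywords are case-insensitive; -q: exit status only
    if ! grep -Eiq '^[[:space:]]*ForwardX11[[:space:]]+yes' "$config"; then
        echo "ForwardX11 yes" >> "$config"
    fi
}

# Usage (modifies your real config):
# enable_x11_forwarding
```

If the config file already contains Host sections, a line appended at the end applies only to the last of them. For a one-off session, `ssh -X user@host` requests forwarding without touching the file.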

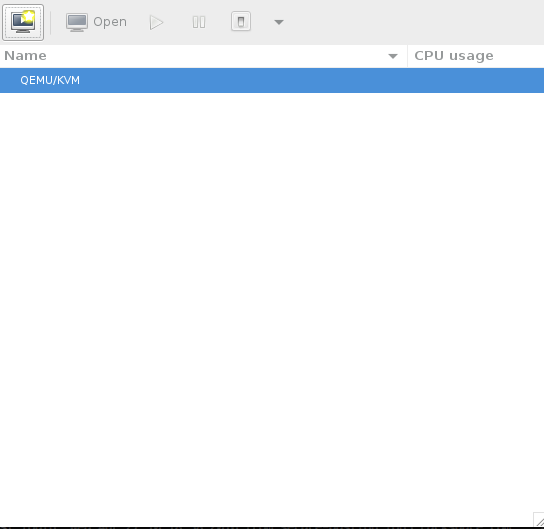

Running virt-manager for the first time

[root@on-metal-test-2 ~]# virt-manager

After running the above command you will see something like the image below. You’ll see an X window open on your local client machine, which belongs to an application running on the remote server you’re connected to via SSH. This is pretty damn cool.
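
If no window appears, a quick thing to check is whether the SSH session actually set up forwarding: sshd normally exports a DISPLAY value such as localhost:10.0 when it did. A minimal sketch to run on the remote server; x11_ready is a hypothetical helper name:

```shell
# Succeed only when DISPLAY is set to a non-empty value in this session.
x11_ready() {
    [ -n "${DISPLAY:-}" ]
}

if x11_ready; then
    echo "X11 forwarding looks active (DISPLAY=$DISPLAY)"
else
    echo "DISPLAY is empty; reconnect with 'ssh -X' or check ForwardX11" >&2
fi
```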

Let’s take this further and install Firefox to demonstrate how awesome this is!

yum install firefox -y

Now we’re using Firefox through SSH. X11 forwarding is much more convenient for this than, for instance, configuring a proxy on the client with a tunnel or VPN.

Nice!

Let’s take it a bit further and start installing XenServer on KVM. I am very tempted to use ZFS for this since onmetal v2 has two 1600GB disks…

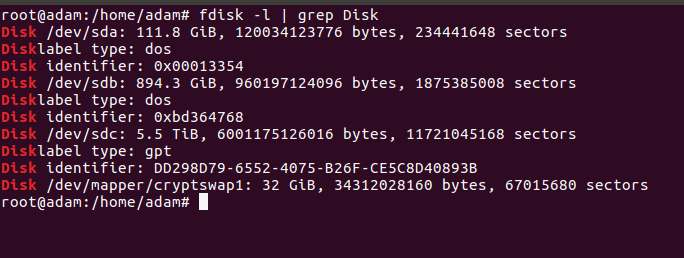

Create partitions for KVM store

fdisk -l

fdisk /dev/sdc

# type m (help), then n (new partition), then p (primary), press enter four times to accept the defaults, then type w to write

fdisk /dev/sdd

# type m (help), then n (new partition), then p (primary), press enter four times to accept the defaults, then type w to write
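
The same keystrokes can be fed to fdisk on standard input, which is handy when repeating the step on both disks. This is a sketch only: it assumes blank disks where every default is acceptable, it skips the m help step, and it overwrites the partition table on the target. The fdisk_answers name is made up here, and the destructive lines are left commented out:

```shell
# Emit the answers for: new partition, primary, default partition number,
# default first sector, default last sector, write.
fdisk_answers() {
    printf 'n\np\n\n\n\nw\n'
}

# Uncomment only after confirming the device names with `fdisk -l`:
# for disk in /dev/sdc /dev/sdd; do
#     fdisk_answers | fdisk "$disk"
# done
```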

Create filesystem for KVM store

[root@on-metal-test-2 ~]# mkfs.ext3 /dev/sdc1 && mkfs.ext3 /dev/sdd1

mke2fs 1.42.9 (28-Dec-2013)

Discarding device blocks: done

Filesystem label=

OS type: Linux

Block size=4096 (log=2)

Fragment size=4096 (log=2)

Stride=0 blocks, Stripe width=0 blocks

97656832 inodes, 390624640 blocks

19531232 blocks (5.00%) reserved for the super user

First data block=0

Maximum filesystem blocks=4294967296

11921 block groups

32768 blocks per group, 32768 fragments per group

8192 inodes per group

Superblock backups stored on blocks:

32768, 98304, 163840, 229376, 294912, 819200, 884736, 1605632, 2654208,

4096000, 7962624, 11239424, 20480000, 23887872, 71663616, 78675968,

102400000, 214990848

Allocating group tables: done

Writing inode tables: done

Creating journal (32768 blocks): done

Writing superblocks and filesystem accounting information: done

mke2fs 1.42.9 (28-Dec-2013)

Discarding device blocks: done

Filesystem label=

OS type: Linux

Block size=4096 (log=2)

Fragment size=4096 (log=2)

Stride=0 blocks, Stripe width=0 blocks

97656832 inodes, 390624640 blocks

19531232 blocks (5.00%) reserved for the super user

First data block=0

Maximum filesystem blocks=4294967296

11921 block groups

32768 blocks per group, 32768 fragments per group

8192 inodes per group

Superblock backups stored on blocks:

32768, 98304, 163840, 229376, 294912, 819200, 884736, 1605632, 2654208,

4096000, 7962624, 11239424, 20480000, 23887872, 71663616, 78675968,

102400000, 214990848

Allocating group tables: done

Writing inode tables: done

Creating journal (32768 blocks): done

Writing superblocks and filesystem accounting information: done

Now we have created the filesystem. What about creating the ZFS pool? Getting there is a fairly laborious process (at least if you don’t know what you’re doing). As I discovered, my yum installation wasn’t automatically providing the matching kernel-devel package that the ZFS DKMS module needs to build against. ZFS originated on Solaris and is not part of the mainline Linux kernel, so on Linux it has to be built as an out-of-tree module.

One of the problems I had was this:

Loading new spl-0.6.5.6 DKMS files...

Building for 3.10.0-327.10.1.el7.x86_64

Module build for kernel 3.10.0-327.10.1.el7.x86_64 was skipped since the

kernel source for this kernel does not seem to be installed.

Installing : zfs-dkms-0.6.5.6-1.el7.centos.noarch 4/6

Loading new zfs-0.6.5.6 DKMS files...

Building for 3.10.0-327.10.1.el7.x86_64

Module build for kernel 3.10.0-327.10.1.el7.x86_64 was skipped since the

kernel source for this kernel does not seem to be installed.

This can be checked in more detail by running:

yum search --show-duplicates kernel-devel

# and

rpm -qa | grep kernel

This gave me the right version of kernel-devel to build the ZFS module against my current kernel, as opposed to recompiling the whole kernel. Nice!

Install ZFS and kernel devel

sudo yum localinstall --nogpgcheck http://archive.zfsonlinux.org/epel/zfs-release.el7.noarch.rpm

sudo yum install epel-release

sudo yum install zfs kernel-devel-3.10.0-327.10.1.el7.x86_64
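
Rather than typing the kernel version by hand, the matching package name can be derived from the running kernel. A small sketch, assuming the distribution names the package kernel-devel-<release> as in the command above; kernel_devel_pkg is a hypothetical helper:

```shell
# Build the kernel-devel package name for a kernel release
# (default: the release of the currently running kernel).
kernel_devel_pkg() {
    release=${1:-$(uname -r)}
    echo "kernel-devel-${release}"
}

echo "Package to install: $(kernel_devel_pkg)"
# e.g. sudo yum install zfs "$(kernel_devel_pkg)"
```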

Enable ZFS

[root@on-metal-test-2 adam]# /sbin/modprobe zfs

Create the 2 disk mirror using ZFS

[root@on-metal-test-2 adam]# zpool create -f kvmstore mirror sdc1 sdd1

Check KVM store disk

[root@on-metal-test-2 adam]# df -h

Filesystem Size Used Avail Use% Mounted on

/dev/md126p1 220G 2.3G 209G 2% /

devtmpfs 63G 0 63G 0% /dev

tmpfs 63G 0 63G 0% /dev/shm

tmpfs 63G 26M 63G 1% /run

tmpfs 63G 0 63G 0% /sys/fs/cgroup

tmpfs 13G 4.0K 13G 1% /run/user/0

kvmstore 1.5T 0 1.5T 0% /kvmstore
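
To check the mount from a script instead of by eye, the df output can be filtered for the pool name. A minimal sketch, assuming `df -P` (the POSIX one-line-per-filesystem format) and mount points without spaces; mountpoint_of is a name invented for this example:

```shell
# Read `df -P` output on stdin and print where the named filesystem is mounted.
mountpoint_of() {
    awk -v fs="$1" 'NR > 1 && $1 == fs { print $6 }'
}

# Prints the mount point if the pool is mounted, nothing otherwise.
df -P | mountpoint_of kvmstore
```

Running `zpool status kvmstore` should additionally list both halves of the mirror.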

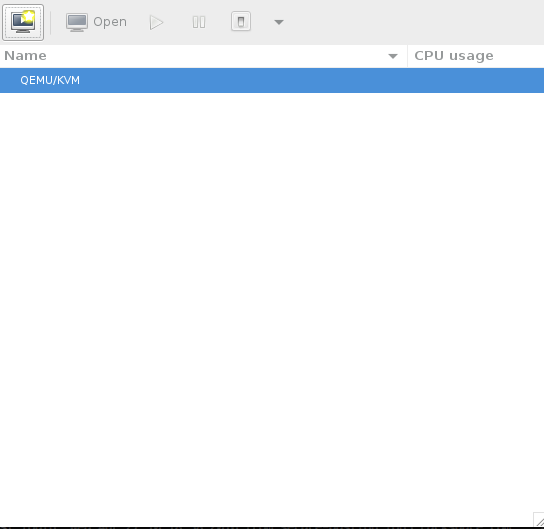

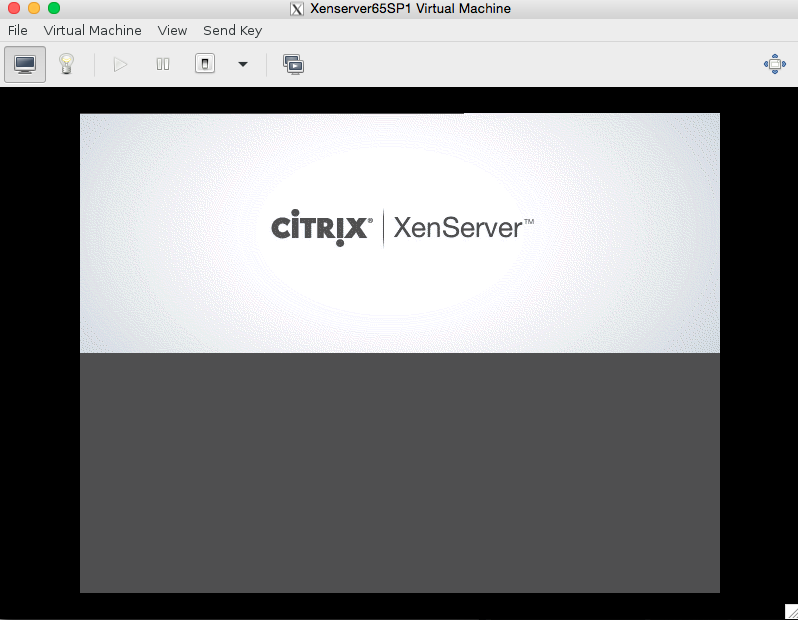

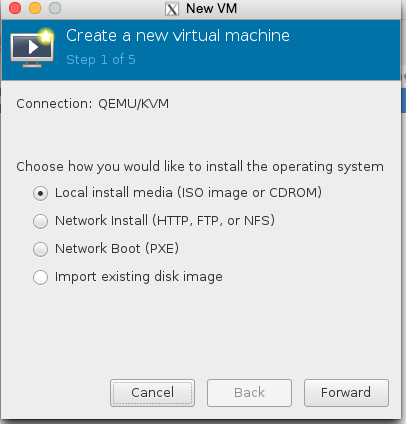

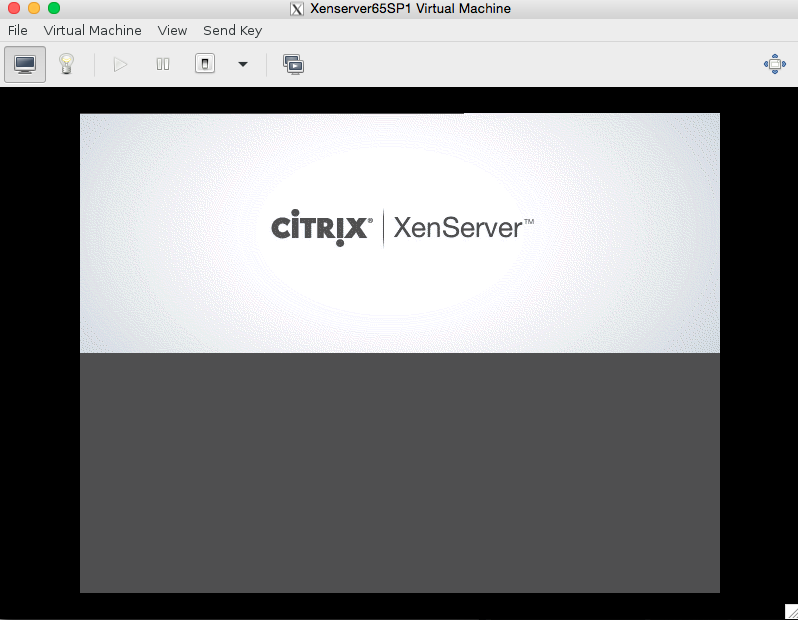

Run virt-manager to create the XenServer VM

Now we’ve created our partition and filesystem and configured ZFS, we can run the virtual machines off the new KVM store. Simples.

Click the icon in the top left corner to create a new VM

Download the XenServer ISO to /root on the hypervisor

[root@on-metal-test-2 ~]# wget http://downloadns.citrix.com.edgesuite.net/10175/XenServer-6.5.0-xenserver.org-install-cd.iso

--2016-04-27 10:29:22-- http://downloadns.citrix.com.edgesuite.net/10175/XenServer-6.5.0-xenserver.org-install-cd.iso

Resolving downloadns.citrix.com.edgesuite.net (downloadns.citrix.com.edgesuite.net)... 104.86.110.32, 104.86.110.49

Connecting to downloadns.citrix.com.edgesuite.net (downloadns.citrix.com.edgesuite.net)|104.86.110.32|:80... connected.

HTTP request sent, awaiting response... 200 OK

Length: 603744256 (576M) [application/octet-stream]

Saving to: ‘XenServer-6.5.0-xenserver.org-install-cd.iso’

100%[====================================================================================================================================>] 603,744,256 17.6MB/s in 38s
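
wget reported a length of 603744256 bytes, so a truncated download can be spotted by comparing the file size on disk. A hedged sketch; size_matches is a hypothetical helper, and a size match is not a substitute for a checksum if the publisher provides one:

```shell
# Succeed when the file's size in bytes equals the expected value.
size_matches() {
    file=$1 expected=$2
    actual=$(wc -c < "$file" | tr -d ' ')
    [ "$actual" = "$expected" ]
}

iso=/root/XenServer-6.5.0-xenserver.org-install-cd.iso
if [ -f "$iso" ]; then
    if size_matches "$iso" 603744256; then
        echo "ISO size OK"
    else
        echo "ISO size mismatch" >&2
    fi
fi
```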

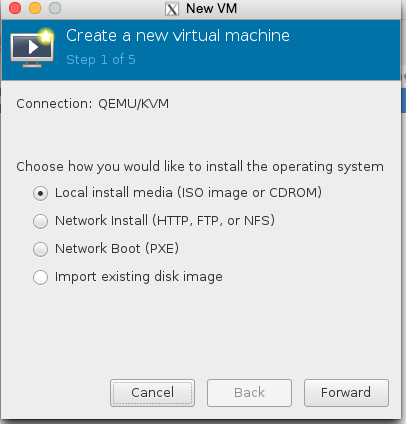

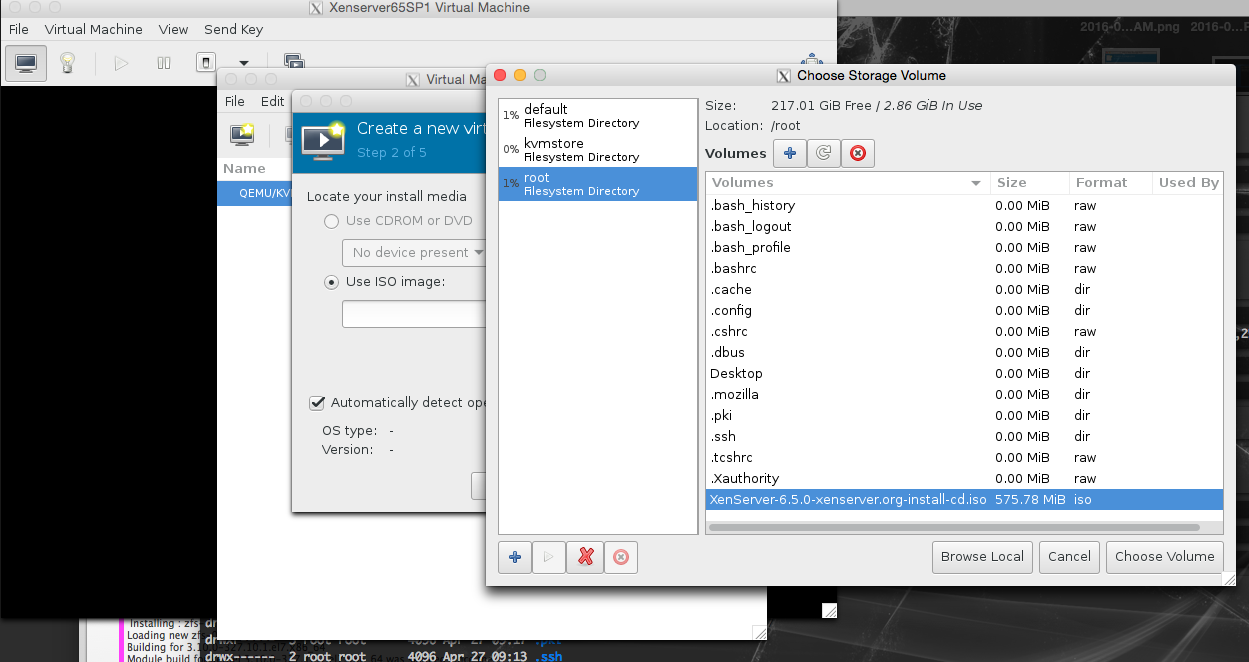

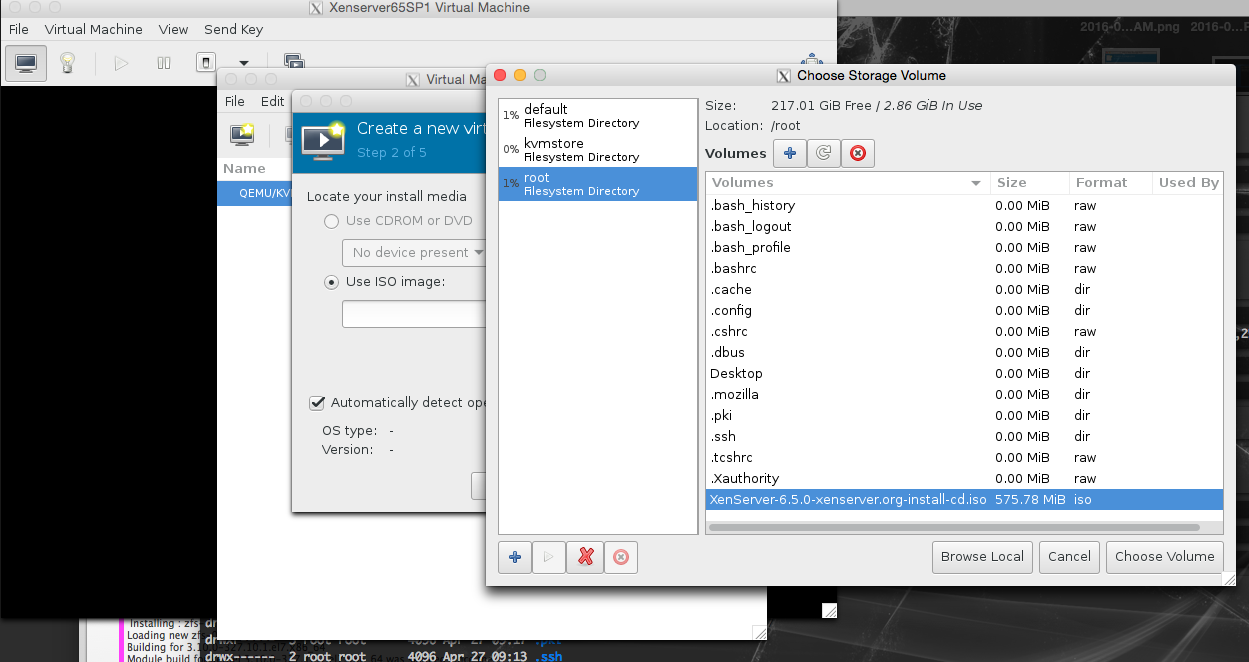

Select Local Media (we’re going to use a XenServer ISO)

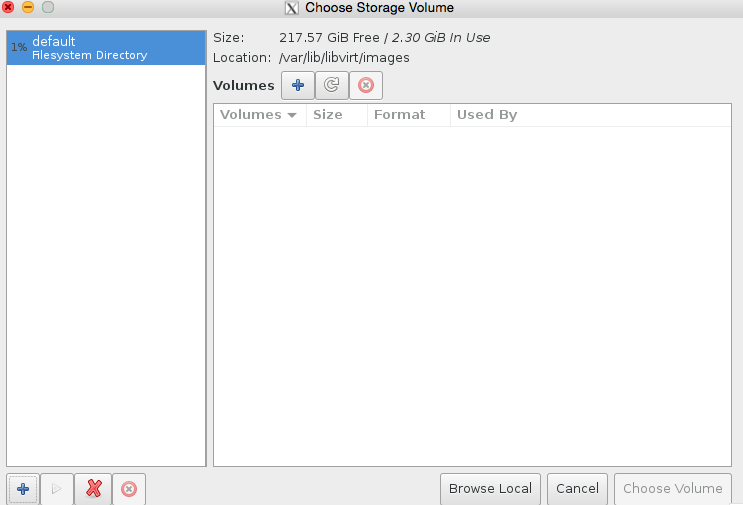

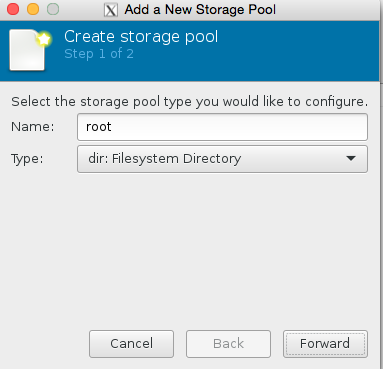

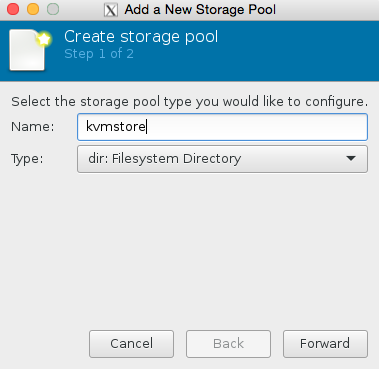

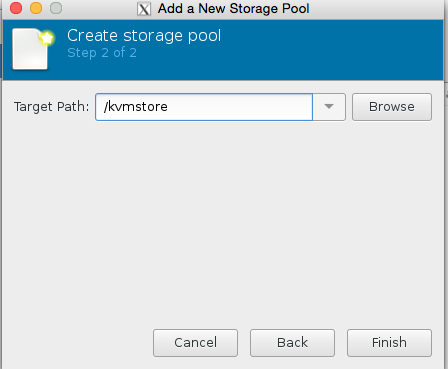

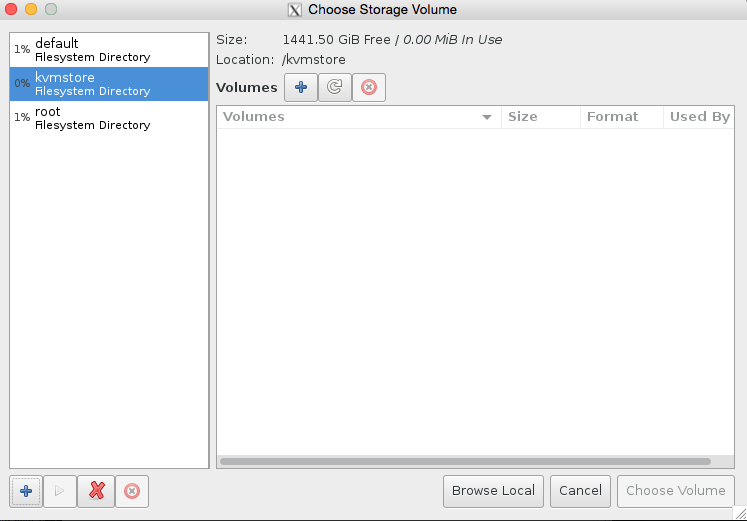

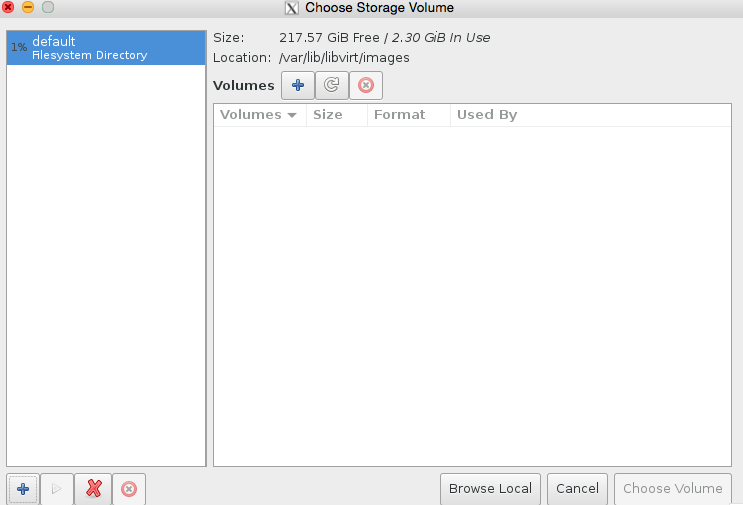

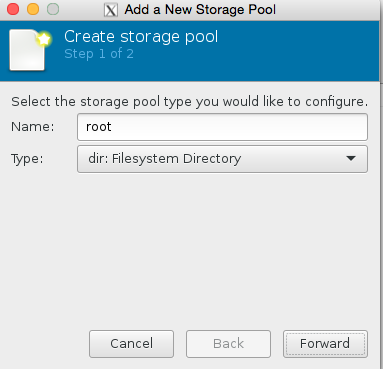

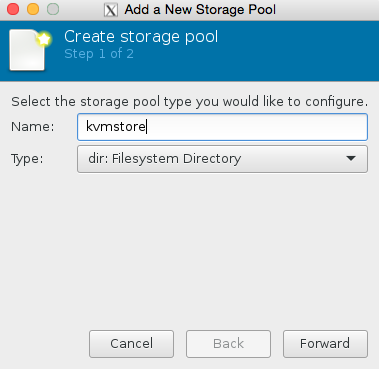

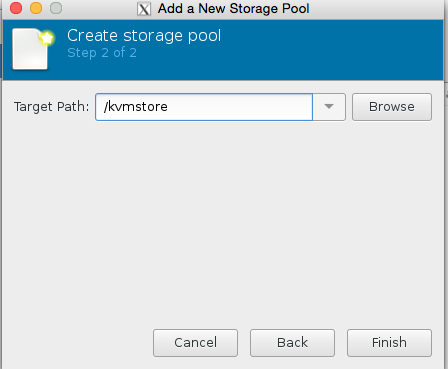

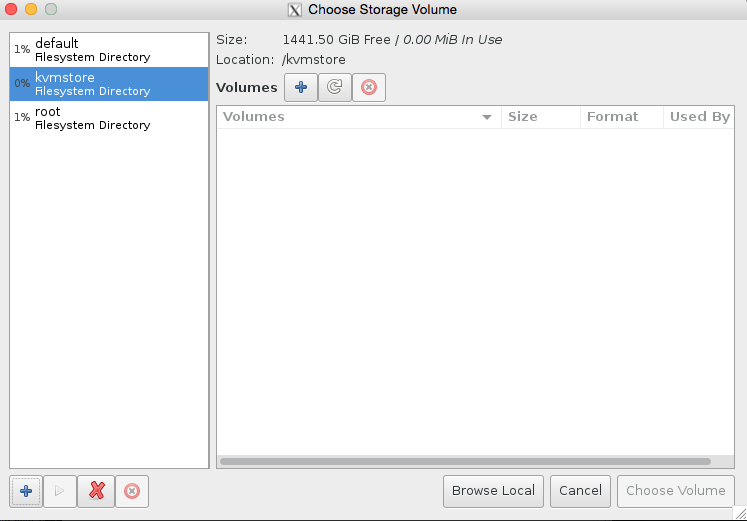

Click Browse, then press the + icon at the bottom left to add some pools. We’re going to add /root, which has our ISO in it, and we’re also going to add kvmstore as well.
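
The same two pools can also be defined from the shell with virsh, which ships with libvirt. A sketch under assumptions: the pool names isos and kvmstore are my own choice, and the helper only prints the commands so they can be reviewed before being pasted into a root shell:

```shell
# Print the virsh commands that define, start and autostart
# a directory-backed storage pool.
pool_commands() {
    name=$1 path=$2
    echo "virsh pool-define-as $name dir --target $path"
    echo "virsh pool-start $name"
    echo "virsh pool-autostart $name"
}

pool_commands isos /root
pool_commands kvmstore /kvmstore
```

Once the printed commands have been run, `virsh pool-list --all` should show both pools.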

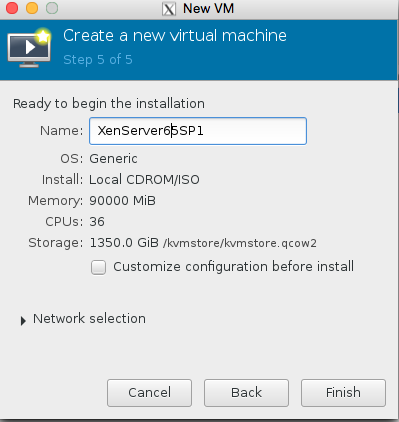

Congratulations, you have now added the stores. Now all we need to do is finish configuring the VM.

Now that the pools are set up, we want to select the /root pool and choose the XenServer ISO we just downloaded.

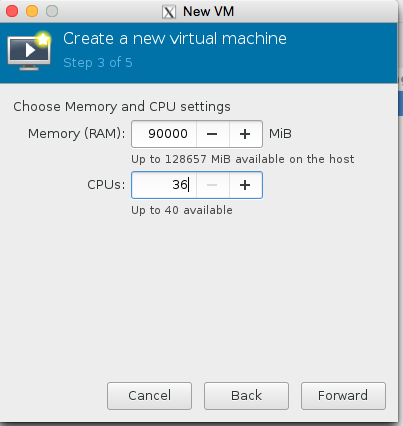

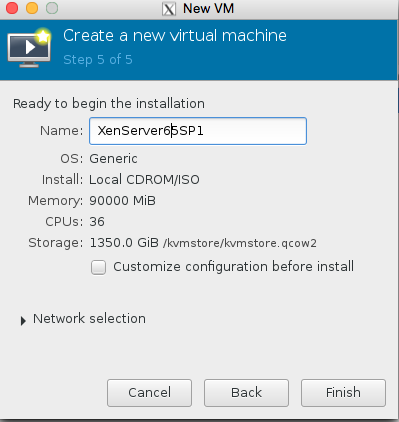

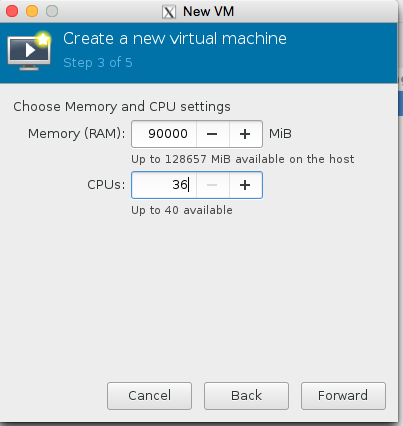

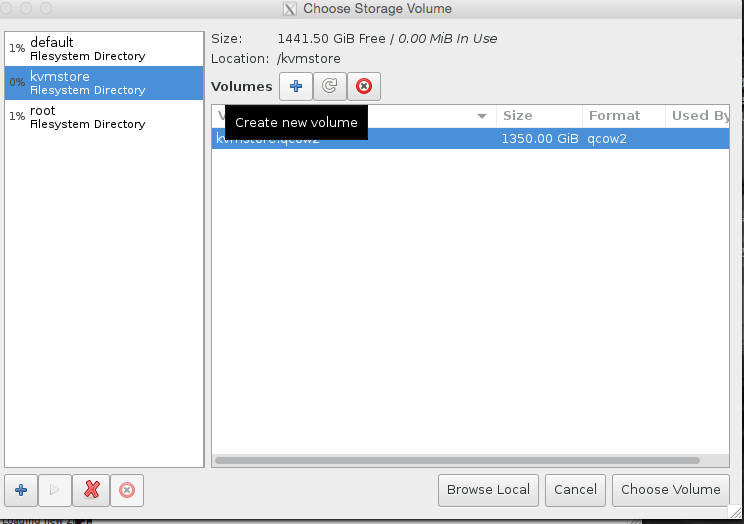

We are almost there now! Let’s set the number of CPUs and the amount of RAM. Also, let’s make sure we use the kvmstore we just set up instead of the ‘main disk’ of the server.

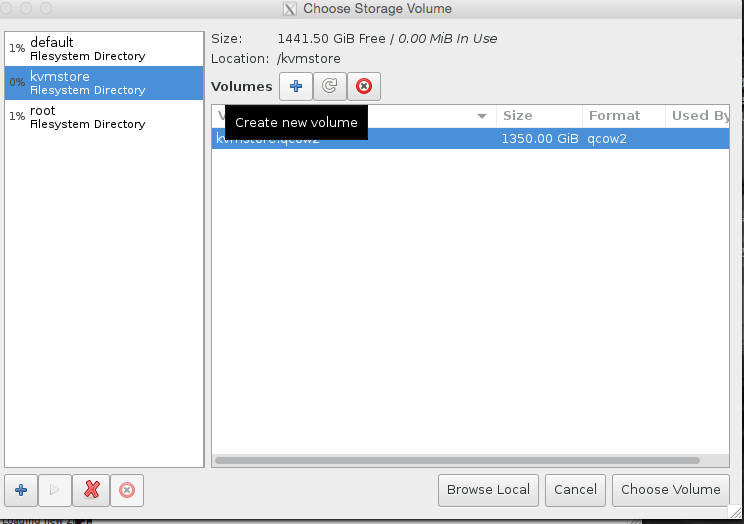

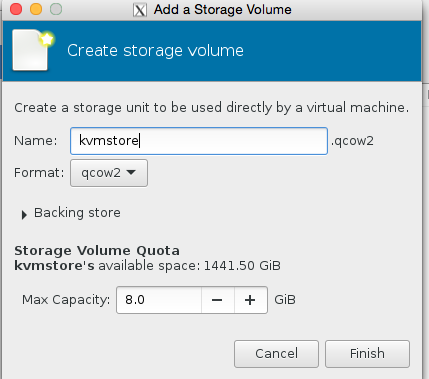

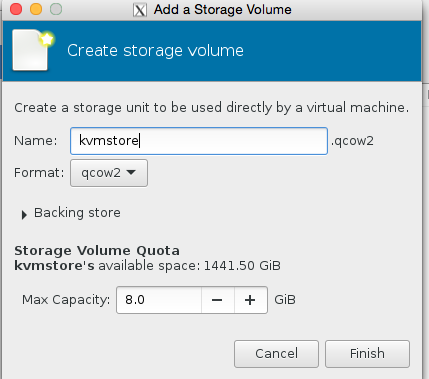

Select our KVM store ‘pool’ on the left-hand side, and then press + to add the kvmstore.qcow2 volume. See the images for illustration.

Click Choose Volume at the bottom left to confirm, and finally name the server.
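
For reference, roughly the same VM can be described on the command line with virt-install, which was installed in Step 2. This is a sketch, not what the wizard runs: the VM name, CPU count, memory and disk size below are placeholder values (the guide sets them in the GUI), and the helper only prints the command:

```shell
# Print a virt-install command for a guest that boots the XenServer ISO
# and keeps its disk on the ZFS-backed /kvmstore.
virt_install_cmd() {
    name=$1 vcpus=$2 ram_mb=$3 disk_gb=$4
    echo "virt-install --name $name --vcpus $vcpus --ram $ram_mb" \
         "--disk path=/kvmstore/kvmstore.qcow2,size=$disk_gb,format=qcow2" \
         "--cdrom /root/XenServer-6.5.0-xenserver.org-install-cd.iso"
}

# Placeholder sizing: 4 vCPUs, 8192 MB RAM, 100 GB disk.
virt_install_cmd xenserver 4 8192 100
```

Newer virt-install releases prefer --memory over --ram; check `virt-install --help` on your host before running the printed command.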

Awww crap, we got this error because the libvirtd KVM configuration isn’t running as root.

This can be quickly resolved by editing /etc/libvirt/qemu.conf, making sure user = "root" and group = "root" are present and uncommented (with plain straight quotes), and then restarting libvirtd so the change is picked up.
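
The edit can be scripted as well. A minimal sketch, assuming the usual key = "value" layout of qemu.conf; set_conf_value is a helper invented for this example, and it leaves a .bak copy of the file whenever it rewrites an existing line:

```shell
# Set key = "value" in a config file: rewrite an existing uncommented
# line if there is one, otherwise append a new line.
set_conf_value() {
    file=$1 key=$2 value=$3
    if grep -Eq "^[[:space:]]*${key}[[:space:]]*=" "$file"; then
        sed -i.bak -E "s|^[[:space:]]*${key}[[:space:]]*=.*|${key} = \"${value}\"|" "$file"
    else
        printf '%s = "%s"\n' "$key" "$value" >> "$file"
    fi
}

# As root:
# set_conf_value /etc/libvirt/qemu.conf user root
# set_conf_value /etc/libvirt/qemu.conf group root
# service libvirtd restart
```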

Job done!