We get quite a few customers asking how to enable the root user in the Rackspace Cloud Databases product. So many, in fact, that I went to the effort of writing a wizard script which asks the customer five questions (account number, database ID, region, username and API key) and then calls the API using the customer account number, the datacentre region and the database ID.
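As a rough sketch of how the account number, region and database ID combine into the endpoint the script calls: the helper name `root_endpoint` and its input checks are my own illustration, not part of the script, and the checks assume account numbers are numeric and region codes are alphabetic.

```bash
#!/bin/bash
# Hypothetical helper (not in the original script): builds the Cloud Databases
# "root" endpoint from a region, an account number and a database instance ID.
root_endpoint() {
    local region account instance
    # Lower-case the region so "LON" and "lon" are treated the same.
    region=$(printf '%s' "$1" | tr '[:upper:]' '[:lower:]')
    account=$2
    instance=$3

    # Assumption: account numbers (DDI) are purely numeric.
    case $account in
        ''|*[!0-9]*) echo "account number must be numeric" >&2; return 1 ;;
    esac
    # Assumption: region codes are short alphabetic strings such as lon or dfw.
    case $region in
        ''|*[!a-z]*) echo "region must be alphabetic, e.g. lon" >&2; return 1 ;;
    esac

    printf 'https://%s.databases.api.rackspacecloud.com/v1.0/%s/instances/%s/root\n' \
        "$region" "$account" "$instance"
}

# Example using the sample values from the control panel URL:
root_endpoint LON 1001111 321738d5-1b20-4b0f-ad43-ded24f4b3655
# prints https://lon.databases.api.rackspacecloud.com/v1.0/1001111/instances/321738d5-1b20-4b0f-ad43-ded24f4b3655/root
```

A POST to that URL enables the root user, and a GET to the same URL reports whether root is enabled.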

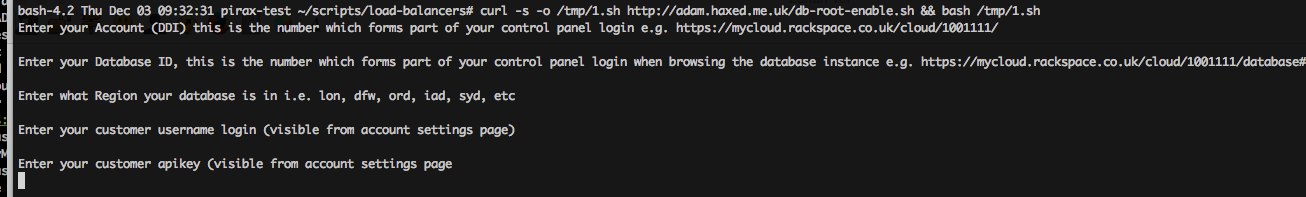

To install and run the script, you only need to run:

curl -s -o /tmp/1.sh http://adam.haxed.me.uk/db-root-enable.sh && bash /tmp/1.sh

However, I have included the script source below for reference. It has been tested and works.

Script Code:

#!/bin/bash
# Enable root dbaas user access
# User Alterable variables
# Author: Adam Bull
# Date: Monday, November 30 2015
# Company: Rackspace UK Server Hosting

# ACCOUNTID forms part of your control panel login; https://mycloud.rackspace.co.uk/cloud/1001111/database#rax%3Adatabase%2CcloudDatabases%2CLON/321738d5-1b20-4b0f-ad43-ded24f4b3655

echo "Enter your account number (DDI). This is the number in your control panel URL, e.g. https://mycloud.rackspace.co.uk/cloud/1001111/"
read -r ACCOUNTID

echo "Enter your database ID. This is the UUID at the end of the control panel URL when browsing the database instance, e.g. https://mycloud.rackspace.co.uk/cloud/1001111/database#rax%3Adatabase%2CcloudDatabases%2CLON/242738d5-1b20-4b0f-ad43-ded24f4b3655"
read -r DATABASEID

echo "Enter the region your database is in, e.g. lon, dfw, ord, iad, syd"
read -r REGION

echo "Enter your customer username (visible on the account settings page)"
read -r USERNAME

echo "Enter your customer API key (visible on the account settings page)"
read -r APIKEY

echo "$USERNAME $APIKEY"

# Request an auth token and pull the token ID out of the JSON response
TOKEN=$(curl -s https://identity.api.rackspacecloud.com/v2.0/tokens -X POST -d '{ "auth":{"RAX-KSKEY:apiKeyCredentials": { "username":"'"$USERNAME"'", "apiKey": "'"$APIKEY"'" }} }' -H "Content-type: application/json" | python -mjson.tool | grep -A5 token | grep id | cut -d '"' -f4)

echo "Enabling root access for instance $DATABASEID... see below for credentials"

# Enable the root user for instance id

curl -X POST -i \
  -H "X-Auth-Token: $TOKEN" \
  -H 'Content-Type: application/json' \
  "https://$REGION.databases.api.rackspacecloud.com/v1.0/$ACCOUNTID/instances/$DATABASEID/root"

# Confirm root user added

curl -i \
  -H "X-Auth-Token: $TOKEN" \
  -H 'Content-Type: application/json' \
  "https://$REGION.databases.api.rackspacecloud.com/v1.0/$ACCOUNTID/instances/$DATABASEID/root"
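The script picks the token out of the identity response with `grep` and `cut`, which depends on how the JSON happens to be laid out. A possible alternative is to parse the JSON properly. This is only a minimal sketch: the helper name `extract_token` is my own, it assumes `python3` is installed, and it assumes the response has the shape `{"access": {"token": {"id": "..."}}}`, which is what the `grep -A5 token | grep id` pipeline implies.

```bash
#!/bin/bash
# Hypothetical helper (not in the original script): reads an identity API
# response on stdin and prints the token ID.
# Assumes python3 is available and the response looks like
# {"access": {"token": {"id": "..."}}}.
extract_token() {
    python3 -c '
import json, sys
try:
    print(json.load(sys.stdin)["access"]["token"]["id"])
except (ValueError, KeyError, TypeError):
    # Invalid JSON or an unexpected shape: print nothing and signal failure.
    sys.exit(1)
'
}

# Example with a canned response instead of a live API call:
sample='{"access": {"token": {"id": "abc123", "tenant": {"id": "1001111"}}}}'
TOKEN=$(printf '%s' "$sample" | extract_token) || { echo "Could not read token" >&2; exit 1; }
echo "$TOKEN"
# prints abc123
```

In the script itself, the output of the `curl` call to the identity endpoint would be piped into `extract_token` in place of the `python -mjson.tool | grep | cut` chain. Checking the exit status also stops the script before it sends API requests with an empty token.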